I love the broad pattern-recognitions of the ol’ gray matter – the simple connections made between thises and thats, spreading inexorably from sites of stimulation and parallel processing, intersecting with ever-larger patterns to create surprising and enriching tangents and leading to great “Ah-HA!” moments.

I had such a moment, of a remarkable nature (which is why I’m remarking on it, I suppose), last week while I was reading through a Matter article on “The Charisma Coach” (I highly recommend checking out the magazine in general – the long-form articles have not yet failed to be intriguing, and I’m happy to have been in the kickstarting crowd). Anyway, the topic of learning charisma and people skills is interesting in its own right, and follows a significant trend in moving the concept of successful execution from the extraordinary skills of an individual to the attitudes of connected groups (“Team Geek”, “How NASA Builds Teams”, “Delivering Happiness”, “Tribal Leadership”, etc.). That’s not particularly new – this is a trend that’s been evolving for a while, and on which I’ve been keeping a keen eye even when struggling with it myself. I readily admit that I’m not the most social person, and have primarily attributed/excused these tendencies by way of being deeply introverted (“people time” exhausts me) and dealing with chronic pain (which lent me an attitude that life is too short to put up with some kinds of crap, such as disingenuousness or celebrated mediocrity, especially when there are under-appreciated park benches out there what need sitting on) – which are weak, selfish excuses that short-change others, but I’m working on it.

Anyway, I got to the part about the puppy: a cognitive shortcut the profiled coach recommended to help someone otherwise feeling impatient and condescending switch his expression and communication modes to something warmer and friendlier – in this case, by lending him a puppy. This serves as a means of engaging different patterns of relating and fulfillment, making it easier to incorporate the target frame of mind into the desired context and modifying the outward behavior as a result.

That’s not new either – this is a form of Cognitive Behavioral Therapy (CBT), which frequently uses functional exercises as a means of shifting context to emphasize preferred behaviors and perspectives. But something about it clicked this time, intersecting with tangents from other reading I’ve done over the last couple of years (“Willpower”, “Thinking, Fast and Slow” – this latter one being my favorite of all the cited works thus far). These books introduce the concept of cognitive energy – and not in a metaphysical woo-crap kind of way, but in terms of literal metabolic respiration, fatigue, and refractory periods. “Willpower” especially, based on its (sound, well-executed) studies, determined that there is no great reservoir of human resolve that can be deepened through exercise, strengthened through exposure, or is particularly inherent to character. This finding runs contrary to commonly inferred attributes of the American Success narrative, wherein one can overcome all obstacles through herculean application of self, and character is the greatest personal attribute (or collection of attributes). It’s a nice idea because it underscores the notion of being in control of one’s own destiny, but it’s a fiction (which is not to say that we don’t have control [of a sort – luck still probably plays the biggest role], but that the means by which we do so differ).

Instead, those “characters” of seemingly endless resolve have simply created sets of cognitive/behavioral shortcuts that fire automatically: rather than engaging with a situation directly, reasoning, rationalizing, and struggling through it, they delegate it to a pre-established set of mental patterns and tools which can handle it with little oversight (a subconscious, or “System 1”, behavior in the terms of “Thinking, Fast and Slow”). They retain the precious and scarce resource of cognitive energy for other things – ideally, for creating new automatic behaviors in a virtuous cycle of reinforcement (note however that this is where bad habits come from too – caveat empty[sic] and all that).

Right, so, the epiphany? I’ve seen lots of CBT-ish approaches to situations: visualization exercises, statements of affirmation, personal ritual, etc. Often used by well-meaning individuals, probably more often used by sales-dudes and self-help gurus, and most irritatingly used by manipulative bosses and woo-peddlers. In most cases where I’ve encountered them, I felt immediately uncomfortable: it was obvious that the exercise in question was meant to manipulate context, and more often than not was meant to justify (or remove perceived excuses from engaging in) activities or behaviors which could not possibly be sustained for very long. Not only living like there were no tomorrow, or whipping up crowd energy and excitement, but creating excuses for pushing too hard – I had a boss once who likened our situation of prepping for a marketing summit to reacting to an incoming ICBM: and in that circumstance, everything other than averting disaster was a secondary and expendable consideration (family, sleep, health, etc.). Only, of course, there was no missile, and conflating the completion of brochures and organization of media materials with horribly explosive death doesn’t exactly square up (or if it does, I would argue that your priorities are probably off the mark as far as functional members of society go). Unsurprisingly I left shortly thereafter – I wasn’t a fan of all the impending apocalypses or what they (unsustainably) required of me (including the marginalization of family – which is just not allowed).

The puppy exercise cued something for me – the concept of the context shift toward desired behaviors that were already established in a low-energy mode, thereby preserving rather than extracting energy. The realization was probably due to this being the exercise and perspective of a fellow introvert, rather than of a crowd-pleasing extrovert, and with the shift-mechanism being a recognizable pattern for me; the extroverts I’ve seen were more likely to introduce shortcuts that worked for them but which were foreign to me, and therefore cognitively expensive and unrealistic. It sounds straightforward, especially written out, but this created a mental pivot that opened up (and/or connected) several new lines of thinking, which are bringing previously unavailable considerations within reach.

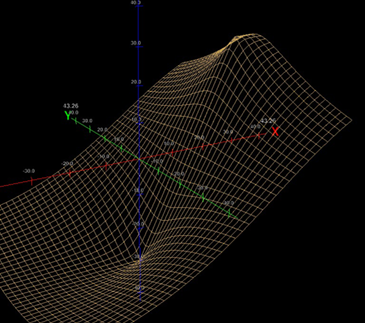

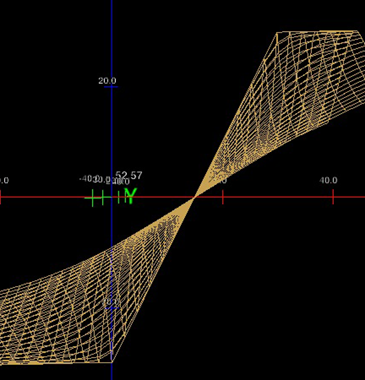

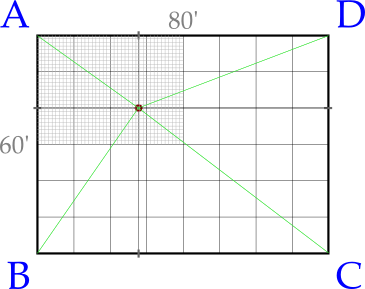

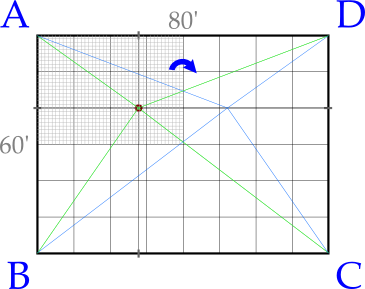

As a similar tangent, this made me think of the high frequency of different mental routes from one place to another. Or perhaps I should say “nervous” routes, since neural networks (physical ones) act more like consolidating grids than strict tree hierarchies, and there are usually several different ways for A to get to B, whether it be in sensory networks or all the way up to the neocortex. Specifically I was reminded of the experience of Scott Adams, the Dilbert cartoonist, in his struggle with spasmodic dysphonia, and how engaging different modes of thought would periodically restore his ability to speak: by finding different routes from speech center to basal ganglia which were not compromised by the neural dysfunction at that moment (the higher-order rationalization of the re-routing into “rule” sets is fascinating too).

What kinds of pockets of low-cost, context-appropriate functionality are out there to be tapped into? This is largely the strategy of hypnosis and neurolinguistics, so you’d think I would have figured this out earlier, but I always thought about it more in the abstract terms of establishing desired patterns rather than finding and interlinking existing ones (though I can see some of this in the underlying Ericksonian methodologies). Thinking about it more at the practical and functional level is fascinating.

Maybe now I can figure out how to manage some “people time” without feeling like I’ve had to compromise on protection of personal identity (and sweet, sweet, brain juice reserves). Perhaps I can find ways of dealing with others whose value systems run more contrary to my own without feeling like I’ve been made to endorse lukewarm insight as genius, or play cloak-and-dagger politics.

Sorry if I’ve been a jerk – per the above, it’s not you, it’s me (unless it is you, in which case knock it off already).